Author Archive

Voting behavior and accumulation of old votes on CV (I know I need to work on my titles)

Considering the blog here has been inactive for a while, I figured I would contribute a little post. One problem that has come up a few times in analyzing historical voting patterns is that older posts have had a longer time to accumulate votes. For instance, in my analysis of reputation effects it is problematic that older posts have a longer exposure time. Recently a question on average votes on CV meta reminded me of it as well. I pointed to the fact that the average number of upvotes for questions and answers on the site is 3.2, but that doesn’t take into account how long the questions have been available to be voted on. So I figured I would dig into the data a bit, aided by the Stack Exchange Data Explorer, and look at how votes accumulate over time.

First, let’s just look at the distribution of voting after a question is created.

The above chart plots a histogram of the post vote date minus the post creation date, and bins all votes after one week together (this query was restricted to 23,344 posts in 2012 so as not to reach the SO data explorer limit). We can see in the histogram that over half of voting is done on the same day the post is created, and over another 20% of votes are cast the following day. By a week, posts have accumulated nearly 90% of their total votes. Below I just arbitrarily assigned votes to either during the week or after the week, and graphed vote contributions aggregated weekly over time.
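The binning behind a histogram like the one described above can be sketched in a few lines of Python. This is only an illustration, not the Data Explorer query used for the post: the function name `lag_histogram`, the `cap_days` parameter, and the example dates are all made up, and I am assuming each vote comes as a (post creation date, vote date) pair.

```python
from collections import Counter
from datetime import date


def lag_histogram(votes, cap_days=7):
    """Share of votes by whole days elapsed between post creation and vote.

    votes: iterable of (post_created, vote_cast) date pairs.
    Lags of cap_days or more are pooled into one final bin.
    """
    counts = Counter()
    for created, voted in votes:
        lag = (voted - created).days
        counts[min(lag, cap_days)] += 1
    total = sum(counts.values())
    # A Counter returns 0 for missing keys, so empty bins show up as 0.0.
    return {lag: counts[lag] / total for lag in range(cap_days + 1)}


# Made-up votes, for illustration only (not data from the site).
example_votes = [
    (date(2012, 1, 1), date(2012, 1, 1)),
    (date(2012, 1, 1), date(2012, 1, 1)),
    (date(2012, 1, 1), date(2012, 1, 2)),
    (date(2012, 1, 1), date(2012, 3, 15)),
]
shares = lag_histogram(example_votes)
```

Here `shares[0]` is the same-day share and `shares[7]` pools everything cast a week or more after the post was created.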

Looking at the trends in the data in cumulative area charts, you can see this slow accumulation over time pretty easily. Also you can see a trend to more voting (partially due to more posts) on the site, but if one just looked at the historical accumulation of total votes it may mask such a trend. Graphing the proportion of votes over time shows an even more striking pattern.

I kind of expected this to show a plateau, but that doesn’t appear to be the case. In the future, if I am doing any analysis on historical voting patterns, I will likely just chop off votes after a pre-specified time (like a week), but certainly more interesting and useful information can be culled from such voting patterns. In fact, as far as reputation effects are concerned, one could reasonably argue the flattery of having older posts voted up is a type of reputation effect (although in general such things are potentially confounded by a host of other factors).

These graphics open up other interesting questions as well, such as whether the historical votes come from new members. I encourage all to brush up on some SQL and go check out the data explorer. Maybe we can harass the StackExchange group to implement some more graphs as well (being able to show a histogram would be nice, as well as interactively redefining which variables go on the x and y axes for the line charts).
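Chopping off votes after a pre-specified time could look something like the sketch below. It is a hypothetical helper, not code from any analysis here: `score_within_window`, its arguments, and the example votes are assumptions, with each vote represented as a (date, +1 or -1) pair.

```python
from datetime import date, timedelta


def score_within_window(created, votes, window_days=7):
    """Net score counting only votes cast within window_days of creation.

    votes: iterable of (vote_date, value) pairs, value being +1 or -1.
    """
    cutoff = created + timedelta(days=window_days)
    return sum(value for voted, value in votes if voted < cutoff)


# Made-up votes on a single post, for illustration only.
created = date(2012, 1, 1)
votes = [
    (date(2012, 1, 1), 1),
    (date(2012, 1, 3), 1),
    (date(2012, 1, 5), -1),
    (date(2012, 6, 1), 1),  # a late vote, excluded by the one-week window
]
windowed = score_within_window(created, votes)
lifetime = sum(value for _, value in votes)
```

Giving every post the same exposure window makes old and new posts comparable, at the cost of throwing away the late votes.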

An open call to participation for the Cross Validated Journal Club

One of the community projects we have started at Cross Validated is a journal club. The journal club is mainly intended to engage the community in general discussion related to really any statistical material (either theoretical or applied). In a meta thread we subsequently propose papers (that are publicly available), and then once enough community interest seems to be in place we set a time and invite the author of the article to come and join us in chat.

Because statistical methods are applied to such a wide array of topics, there is really an expansive set of topics the journal club can cover. For prior discussions, one can peruse the journal club tag on the CV meta site, but for a quick round-up here are a few example discussions so far:

- Data confidentiality: A review of methods for statistical disclosure limitation and methods for assessing privacy by Gregory J. Matthews and Ofer Harel

- Douglas N. Arnold, Kristine K. Fowler (2011). Nefarious Numbers. Which involves criticism of impact factor scores for journals.

- A Statistical Analysis Of Multiple Temperature Proxies: Are Reconstructions Of Surface Temperatures Over The Last 1000 Years Reliable? By Blakeley B. McShane and Abraham J. Wyner

I’d like here to encourage both regular Cross Validated members and passers-by from other communities to propose material. In particular, I’d like to note that the proposed material need not be advanced statistical methodology. In fact we probably get more lively discussions when the material is basic enough to allow a wide array of individuals with varying backgrounds to understand the material and participate in general discussion.

So stop on by the current proposal, the seventh Cross Validated journal club, and either vote up the current proposal or propose some more material to discuss!

Increasing Visibility of the CV blog (and why pie charts kind of suck)

A recent question on meta.stats.se has brought up some concerns over how visible the blog is on the site. Here I will present some statistics on site views and referrals over the time of the blog’s existence.

First I made a comment in the aforementioned link that the majority of the site traffic so far has not come from the main site. Below is a time series chart of the accumulated site views for the site. I have superimposed blue dots for when an item was posted on the blog, and red dots for when key figures either tweeted or referred to one of our posts in a blog.

I’ve annotated the one huge spike of over 2,000 views in the chart, as the main driver behind that surge was a tweet by Joel Spolsky. Below is the same chart with the Y axis restricted to within the range of more usual site traffic flow.

In general it appears that site traffic sees a slight increase whenever a new post is published. Again I’ve highlighted several key referrals from outside sources that appear to have driven site traffic up and that aren’t due to the initial spike from a new post: one from a mention of the Emacs post by David Smith over at the Revolution Analytics blog, and another from a mention of the recent tables post by Rob Hyndman on his personal blog. Below I have inserted the table of referral sites from the WordPress dashboard (site URLs are truncated to the first 35 characters).

I arbitrarily collapsed these sources into different categories. So for the grand finale, sit down and have a slice of pie to visualize the proportion of referrals that come from stats.stackexchange.

Just kidding. Pie charts aren’t necessarily awful in this instance, but it is difficult to tell the difference in sizes among the three categories of stats.stackexchange, key blogs/tweets, and other sources. This is a trivial task with either a bar chart or a dot plot, and below I have reposted the same data in a bar plot. It is easy to see there that referrals from stats.stackexchange are the smallest among the categories.

Note that these are just referrals that are recorded by WordPress. As of writing this post, there were approximately 6,000 total site views. That means about 20% of the total site views so far are from Tal Galili’s R-Bloggers syndication! The other sources category includes referrals from blog.overflow and other questions on the SE sites, but referrals from the main Stats.SE site should be largely represented within that category. These recorded referrals obviously under-count actual referrals as well. Twitter is only listed as accounting for a total of around 300 referrals, although the huge spike of over 2,000 views on 8/5/2011 can only be reasonably explained by Joel Spolsky’s mention on Twitter.

The reason pie charts suck is that visualizing the angles of slices in pie charts is more difficult than visualizing the length of a line (bar charts) or the position of a point in a Cartesian coordinate system (dot plots). Bad pie charts are frequently chastised for having too many categories, but they are worse than bar charts or dot plots even when they have a small number of categories! If you’re being difficult you could perhaps argue that pie charts are still useful because they don’t need a common scale with which to make comparisons (see the maps of Charles Minard for an example), or that their ubiquity should leave them as an option (as they are so prevalent we have developed a gestalt for interpreting them). I think my response to these critiques would be that it’s refreshing to hear an argument for pie charts that isn’t “I like the way they look!”

Website analytics is a bit out of my ken, but my speculation from the site traffic and referral statistics is as follows:

- Click throughs to the blog from the main stats site are pretty sad. I don’t know what the average site visits are for stats.se (apparently such info is a secret), and I don’t know what a reasonable number of clickthroughs would be. But I do know averaged over the time period the blog has been in existence, we are averaging around 3~4 referrals from the main stats site to the blog per day. Cats walking on the keyboard and by chance clicking on the link to the blog at the very bottom of the page are perhaps to blame.

- Referrals from outside sources have a much greater overall potential to increase traffic to the site, regardless of how much we improve referrals from the main site.

So where to go from here? Maybe we should just ask Joel to tweet all of our blog posts, or just spam the R-Bloggers feed with all our posts. Being serious though, I would just like to see the community take a greater part in writing posts. I assume that quality content is the best means to attract more visitors to the blog, and along the way we can figure out how to do a better job of integrating the blog with the main site and what role the blog will take as a supplement to the main Q/A site.

That being said I do think that a permanent link to the blog in the header of the main page is a good idea as well (although I have no idea how much traffic overall it will bring). Also all of you folks with twitter accounts (along with other social networking updates) would be doing us a favor by pointing to posts on our blog you think are worthwhile. It could potentially cascade into a much wider audience than we could ever get directly from the Cross Validated main site as well.

The blog is an excellent platform for issues that don’t fit well within the constraint of questions and answers on the main sites, and so I believe it is a useful tool in the dissemination of information that community members agree is important. I’d like here to remind the community that every member of the community is invited to contribute a post to the blog. We undoubtedly need greater involvement from the community though to make the blog sustainable. Several suggested thematic post series have gone unwritten because we need help! Surely more analysis of the wealth of public data in the stack exchange data dump would be of wide interest to the general community as well.

I’ve posted the data used in this post (along with SPSS syntax to produce the charts) in this google code link. Thoughts from the stats community on the topic are always appreciated, and if other communities have advice about promoting their blogs I would love to hear it as well.

Some notes on making effective tables

I realize that information visualization through graphical displays is all the rage these days, but we shouldn’t forget about tables as a means to communicate numerical information. In fact, this post was motivated by a recent paper (Feinberg & Wainer, 2011) that talks about how tables are more frequent than graphical displays even in The Journal of Computational and Graphical Statistics (and vastly outnumber graphical displays in several other fields mentioned).

So, what are some of the things we should keep in mind when making tables?

- Comparisons are easier between items in close proximity, and comparisons within columns tend to be easier than within rows.

- Ordering the results of the table provides greater readability. If you order the rows by numerical attributes it can even sometimes illustrate some natural groups in the data. Grouping by some pre-defined criteria obviously allows for easier comparisons within groups. Avoid ordering by alphabetical order.

- Limit the number of digits you display (the fewer the better). Especially in the case of some quantity measured with error (such as regression coefficients) it doesn’t make much sense to display any more decimal places than the error in the estimate supports. Although some evidence suggests human memory can handle up to seven digits, most of the literature cited here suggests far fewer (two or three only, if possible).
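As a rough sketch of the last point, one way to pick the number of decimal places is to round an estimate to the position of the leading digit of its standard error. The helper below is my own illustration (the name `round_to_error` and the `sig_digits` parameter are assumptions, and the numbers are made up); it is one convention among several, not a rule taken from the papers cited here.

```python
import math


def round_to_error(estimate, std_error, sig_digits=1):
    """Round an estimate and its standard error to the same decimal place,
    chosen so the standard error keeps sig_digits significant digits."""
    if std_error <= 0:
        raise ValueError("std_error must be positive")
    # Position of the leading significant digit of the error (e.g. -2 for 0.0321).
    exponent = math.floor(math.log10(std_error))
    decimals = sig_digits - 1 - exponent
    return round(estimate, decimals), round(std_error, decimals)


# Made-up regression coefficient and standard error.
coef, se = round_to_error(1.23456, 0.0321)
```

With a standard error of about 0.03, reporting the coefficient as 1.23 conveys everything the estimate can support.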

Some other random musings about tables

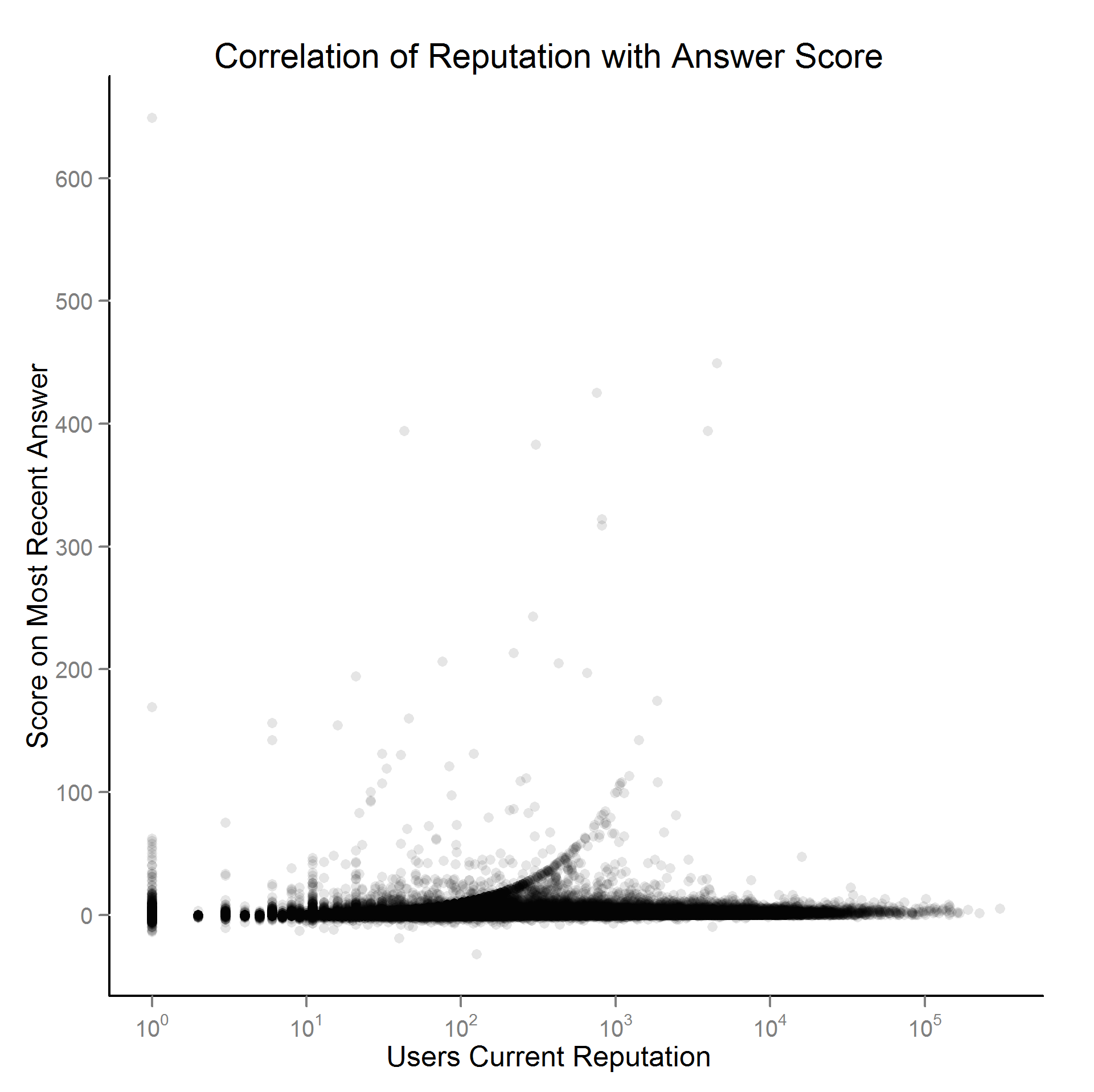

For large numerical lists using an anchor figure allows easier relative comparisons. An anchor item in this instance is frequently some other type of summary statistic that allows one to compare an individual item to a summary of the group of items (for instance the median value of the distribution displayed in the table). Feinberg & Wainer (2011) provide some examples of this, and below is an example table in their article comparing model fit statistics for sequential models.

Coloring the lines in a table is potentially an effective way to distinguish groups of observations (Koschat, 2005), or to provide visual cues that prevent transposing of rows. This initially crossed my radar when I saw a thread at the user experience website on zebra stripes, which subsequently led me to some research by Jessica Enders that gives some modest evidence of the efficacy of colored lines in tables. Also, one of the stats site’s members stated he had found some evidence that, for data entry, colored lines on every third row provided more accurate data input. Although initially I thought such colored lines were at best distracting, I think I am coming around to them (provided they are subtle I think they can still be aesthetically pleasing). See for instance how PLoS ONE publications color every other line grey in tables (Cook & Teo, 2011). Below is an example taken from an article, typesetting tables (from 24ways.org), demonstrating the use of coloring every other line.

Tables and graphs can co-exist, sometimes even in the same space! See for instance Michael Friendly’s corrgrams (Friendly, 2002) that use color to designate size and direction of correlation coefficients which can be superimposed on a table displaying a correlation matrix. One of my favorite examples of this is from a post by chl on visualizing discriminant validity.

Another example of combining tables and graphs is displayed in this post by Stephen Few. Such enhancements to tables should please both the graph people and the table people (Friendly & Kwan, 2011). A trade-off that one should keep in mind though is that too much color (especially darker colors) can frequently diminish the readability of text (Koschat, 2005 mentions this), and vice versa, text can distract from the graphical display (Stephen Few mentions this in this short paper on using color in charts in an analogous situation).

Another combination of graphs and tables is the use of sparklines. Sparklines are very small graphic displays (sometimes they can be referred to as glyphs) that can be inserted directly into text, superimposed onto maps (see this example from the frumination blog, or this current working paper of Hadley Wickham) or, of relevance here, within cells of a table. Most examples I have seen are line charts, but others might be bar, pie, or area charts, or histograms. A good collection of examples can be found at the Sparklines for Excel website, which provides a free add-on tool for making sparklines in Excel. Below is an example taken from another post by chl utilizing the sparkTable package in R to produce a LaTeX table with sparklines included.

The infosthetics blog also has some other examples of sparklines used in tables and other graphics.

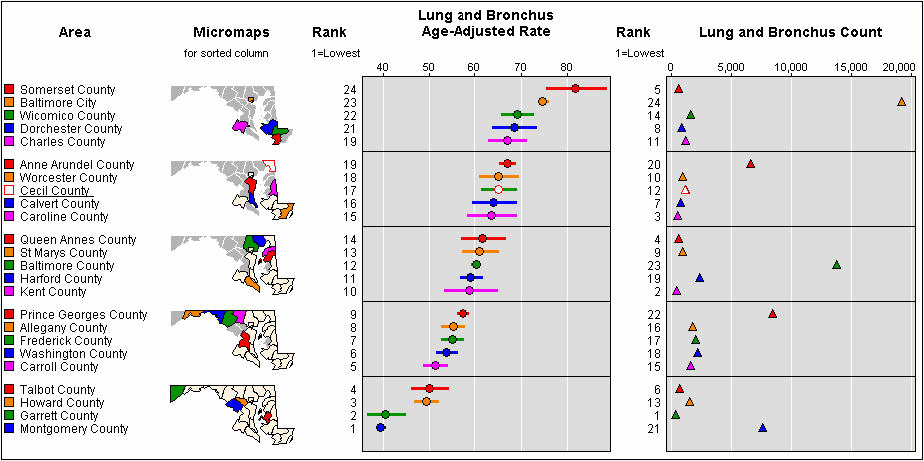

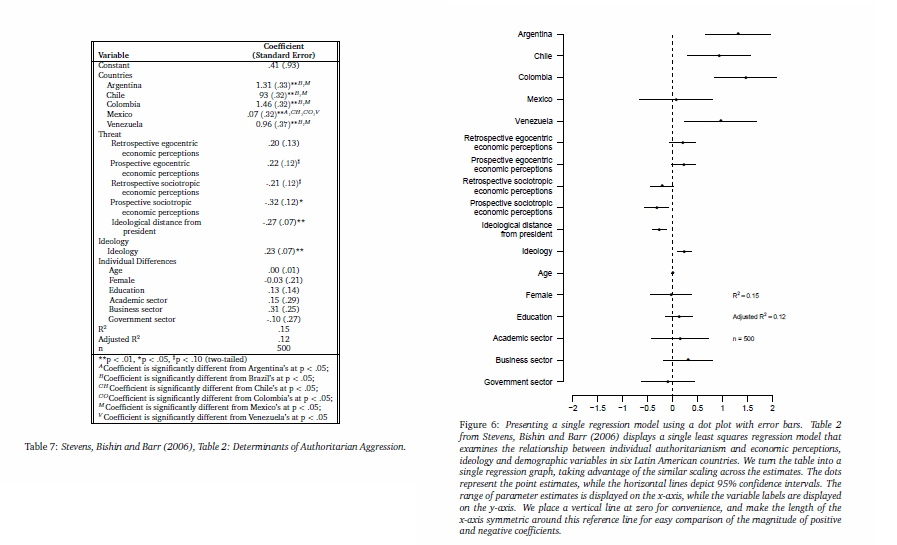

Know when a table is (and is not) appropriate. Much of the criticism of tables is directed in part at tables that present results that are difficult to decode relative to graphical summaries (Gelman et al., 2002; Kastellec & Leoni, 2007; King et al., 2000), or at the difficulty of detecting overall trends in large tables (for instance see the recent papers of Cook & Teo, 2011 and Arribas-Bel et al., 2011 on visualizing simulation results). A frequent use case that I come across where graphs are much easier to digest (and that hasn’t been mentioned so far in the post) is when people report items in a table in the form of intervals. Telling whether two intervals overlap is much simpler in a graphic than in a table, as in a table one needs to evaluate two separate numbers (which will ultimately be in separate rows and columns) to see if the intervals overlap.
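To make the interval point concrete, here is the comparison a reader of a table has to do in their head, written out as a small Python check. The function name and the example intervals are hypothetical, and the intervals are treated as closed (endpoints included).

```python
def intervals_overlap(a, b):
    """True if closed intervals a = (low, high) and b = (low, high) share any point."""
    (a_low, a_high), (b_low, b_high) = a, b
    # Each interval must start no later than the other one ends.
    return a_low <= b_high and b_low <= a_high


# Made-up confidence intervals for three coefficients.
ci_x1 = (0.12, 0.48)
ci_x2 = (0.40, 0.95)
ci_x3 = (1.10, 1.60)

x1_vs_x2 = intervals_overlap(ci_x1, ci_x2)
x1_vs_x3 = intervals_overlap(ci_x1, ci_x3)
```

Each check needs the lower bound from one row and the upper bound from another, which is exactly the cross-row, cross-column lookup that a plot of the intervals makes unnecessary.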

The Kastellec & Leoni (2007) paper has a couple examples of this with confidence intervals for regression coefficients, and one is given below adjacent to the original table of regression coefficients it is representing.

While it is often necessary to use a table, keep in mind potential graphical alternatives that can more effectively communicate your information, and allow more rich comparisons of your numerical summaries to be made.

Frequently WYSIWYG spreadsheet programs, such as Excel or OpenOffice, are used to create tables. But considering this is a statistics site, I suspect many of the readers will be interested in tools directly available in either statistics packages or the typesetting program LaTeX.

- For R, the packages xtable and apsrtable can produce HTML and LaTeX tables.

- For Stata, Ian Watson has created a package, tabout, that exports tables to LaTeX or HTML.

- For LaTeX, the online wiki is a great introduction to making tables, and one can peruse the listings on the Stack Exchange TeX site tagged tables for more examples.

- For SPSS, the additional ctables package might be of interest (although the base functionality for making many tables is quite extensive), as well as the Modify Tables and Merge Tables Python extensions listed on the Developerworks forum.

Also, examining the wikis or websites for such packages frequently gives good examples of table usage as well.

Citations & Other Suggested Readings

Arribas-Bel, Daniel, Julia Koschinsky & Pedro Amaral. 2011. Improving the multi-dimensional comparison of simulation results: A spatial visualization approach. Letters in Spatial and Resource Sciences Online First. Ungated pre-print PDF

Cook, Alex R. & Shanice W. Teo. 2011. The communicability of graphical alternatives to tabular displays of statistical simulation studies. PLoS ONE 6(11): e27974. PDF available from publisher.

Feinberg, Richard A. & Howard Wainer. 2011. Extracting sunbeams from cucumbers. Journal of Computational and Graphical Statistics 20(4): 793-810. PDF available from publisher.

Friendly, Michael & Ernest Kwan. 2011. Comment on Why tables are really much better than graphs. Journal of Computational and Graphical Statistics 20(1): 18-27. PDF available from publisher.

Friendly, Michael. 2002. Corrgrams: Exploratory displays for correlation matrices. The American Statistician 56(4): 316-324. PDF preprint, Related R package

Gelman, Andrew, Cristian Pasarica & Rahul Dodhia. 2002. Let’s practice what we preach. The American Statistician 56(2): 121-130. Ungated PDF

Kastellec, Jonathan P. & Eduardo Leoni. 2007. Using graphs instead of tables in political science. Perspectives on Politics 5(4): 755-771. Ungated pre-print PDF

King, Gary, Michael Tomz & Jason Wittenberg. 2000. Making the most of statistical analysis: Improving interpretation and presentation. American Journal of Political Science 44(2): 347-361. Ungated PDF

Koschat, Martin A. 2005. A case for simple tables. The American Statistician 59(1): 31-40.

This thread on stats.se, What is a good resource on table design? lists these and various other references for constructing tables. Got any examples of exemplar tables (or other suggested resources)? Share them in the comments!

ps – Special thanks to those Cross Validated folks who have helped to contribute and provide examples for the article, including chl, Mike Wierzbicki and whuber.

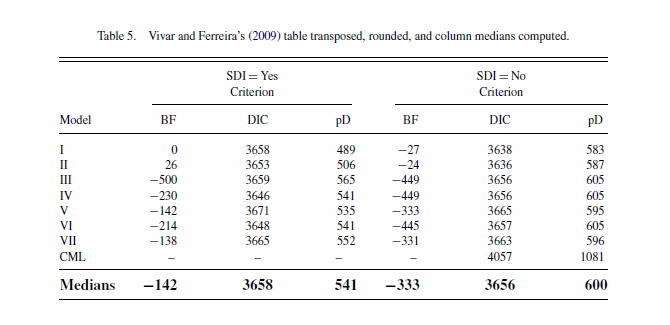

AndyW says Small Multiples are the Most Underused Data Visualization

A while ago a question was asked on stackoverflow, Most underused data visualization. Well, instead of being that guy who crashes the answering party late, I will just use this platform to give my opinion: Small Multiples.

For a brief intro on what small multiples are see this post on the Juice Analytics blog, Better Know a Visualization: Small Multiples. But here briefly, small multiple graphs are a series of charts juxtaposed in one graphic (sometimes these are referred to as panels), that have some type of common scale. This common scale shared by the panels allows one to make comparisons between elements not only within each panel but between the panels.
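The common scale is the key ingredient, so here is a minimal Python sketch of what it amounts to: computing one set of axis limits from the data in all panels rather than letting each panel pick its own. The function `shared_limits`, the `pad` parameter, and the panel values are all made up for illustration; plotting libraries usually do this for you (in matplotlib, for example, via the `sharex` and `sharey` arguments to `plt.subplots`).

```python
def shared_limits(panels, pad=0.05):
    """Common (low, high) axis limits covering the values in every panel.

    panels: dict mapping a panel label to a list of numeric values.
    pad: fraction of the overall range added as a margin on each side.
    """
    values = [v for series in panels.values() for v in series]
    low, high = min(values), max(values)
    margin = (high - low) * pad
    return low - margin, high + margin


# Made-up series for two panels.
panels = {"Group A": [1, 4, 2], "Group B": [10, 12, 11]}
y_low, y_high = shared_limits(panels)
```

Drawn on these shared limits, the difference in level between the two groups is visible at a glance; with per-panel limits the two panels would look deceptively similar.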

I know small multiples aren’t per se a data-visualization. Maybe it could be considered a data visualization technique, which can be applied to practically any type of chart you can think of. I also don’t have any data to say it is underused either. I’ll show a few examples of small multiples here in this post, and most contemporary stat software has the capability to produce them, both suggesting it is not underused. But, I believe small multiples should be a tool we use frequently for data visualization based on the wide variety of situations in which they can be usefully applied.

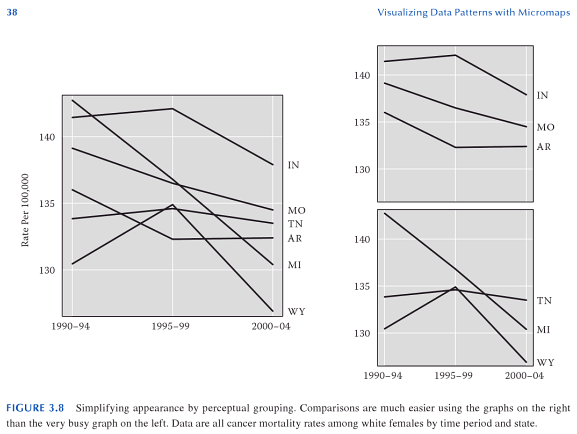

The problem

It is quite easy for one chart to become so busy with elements that the graphic becomes very difficult to interpret, or for over-plotting of the elements to occur (i.e. objects within the chart overlap). I think a great example of this is demonstrated in Carr and Pickle (2009) (originally taken from Elements of Graph Design by Stephen Kosslyn). In the example below, we can see that simply reducing the number of line elements within each chart allows the analyst to get a much better sense of each of the lines, and how they relate to one another, much more so than in the original chart with 6 elements all in one.

Other frequent uses of small multiples are to graphically explore relationships between subsets of the data (for an example of this, see this post of mine over on Cross Validated), or multiple variables (such as in a scatterplot matrix).

Some random things to keep in mind when making small multiples

Maximize color contrast to be able to distinguish patterns in smaller displays.

Because of the reduced real estate available to each panel in the graphic, more nuanced changes (such as slight changes in color saturation or hue) are harder to perceive. Increasing the contrast between different color categories, using dichotomous color schemes, or only displaying certain sets of the data are ways to achieve a greater amount of contrast. Richie Cotton on the original SO post pointed to a good example of this for Horizon Graphs. An example of small multiple maps by Andrew Gelman, which uses a dichotomous color scheme when mapping attributes to U.S. states, is another good example. An example of only displaying certain sets of the data can be found by examining linked micromaps.

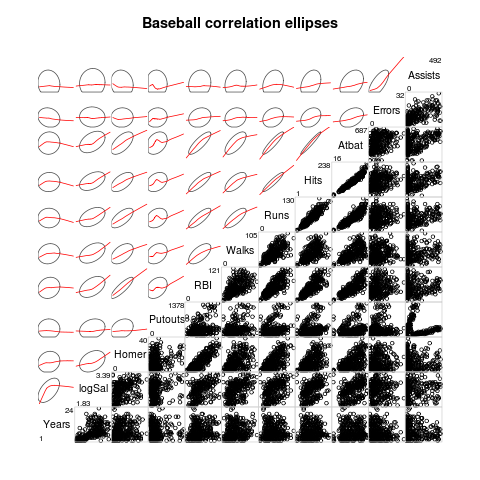

Because of the small space, reducing information into key patterns can be useful.

A good example of what I mean here is, for large scatterplot matrices, plotting only the bivariate ellipses and a loess smoother instead of all the points (see Friendly, 2002). Below is an example taken from the R Graphical Manual.

The same type of smoothing and plotting bounds on data can be extended to many line charts as well.

My last bit of advice is don’t expect to only make one graph. But feel free to stop by at Cross Validated for some suggestions if you get hung up on a tough data visualization task.

AndyW

References for Further reading on Small Multiples, and their benefits

Carr, Daniel & Linda Pickle. 2009. Visualizing Data Patterns with Micromaps. Boca Raton, FL. CRC Press.

Cleveland, William. 1994. The Elements of Graphing Data. Summit, NJ. Hobart Press.

Friendly, Michael. 2002. Corrgrams: Exploratory displays for correlation matrices. The American Statistician 56(4): 316-324.

Tufte, Edward. The Visual Display of Quantitative Information. Cheshire, CT. Graphics Press.

Let me know in the comments if you have any suggested references, or your favorite examples of small multiples as well!

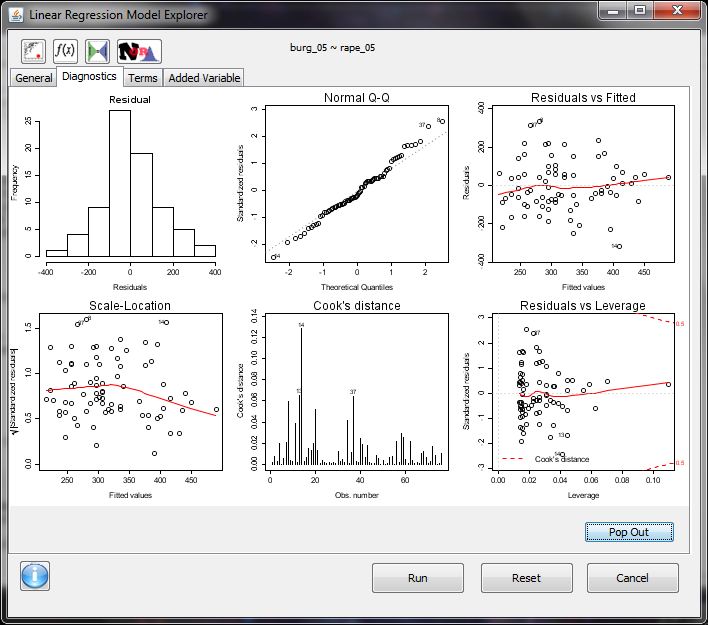

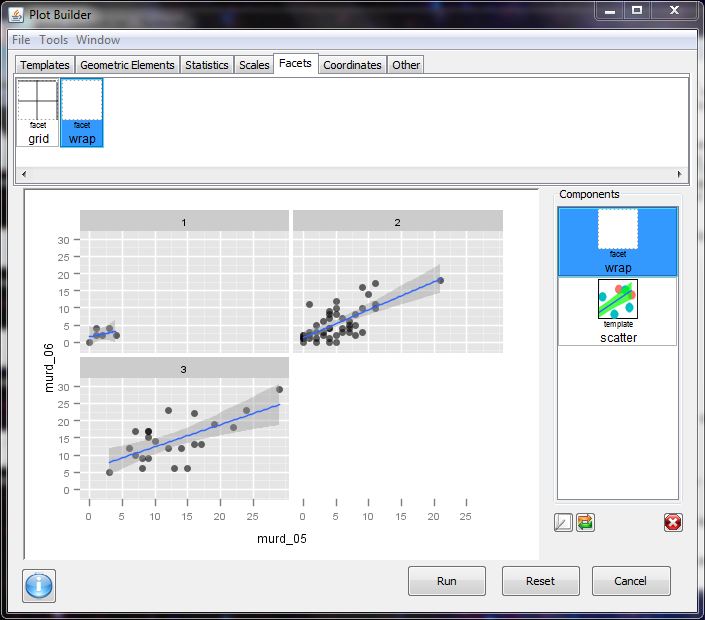

Using Deducer to work with R

If one checks out the initial question that prompted this series, a common theme in the answers is that one should use the GUI as a tool to help one build code (and not just as a crutch to do the analysis). Being able to view the code produced by the GUI should help beginner R users learn the commands (and facilitate scripting analysis in the future). The following blog post highlights one of the popular GUI extensions to R, the Deducer package, and my initial impressions of the package.

It is obvious the makers of the Deducer package have spent an incredible amount of time creating a front end GUI to build plots using the popular ggplot2 package. While it has other capabilities, it is probably worth checking out for this feature alone (although it appears R-Commander recently added similar capabilities as well).

Installation: Long story short, on my Windows 7 laptop I was able to install Deducer once I updated to the latest version of R (2.13 as of writing this post), and installed some missing Java dependencies. After that all was well, and installing Deducer is no different than installing any other package.

What does Deducer do? Deducer adds GUI functionality to accomplish the following tasks:

- load in data from various formats (csv, SPSS, etc.)

- view data + variable types in separate data viewer

- conduct data transformations (recode, edit factors, transformations, transpose, merge)

- statistical analysis (mean differences, contingency tables, regression analysis)

- A GUI for building plots using the ggplot2 package

Things I really like:

- data viewer (with views for spreadsheet and variable view)

- ability to import data from various formats

- regression model explorer

- interactive building of plots (the ability to update currently built plots is pretty awesome)

Minor Quibbles:

- I would like all of the commands to open in their own call window, not just plots (or to be able to route commands to an open or defined script window, à la log files).

- I am unable to use the console window if another deducer window is open (e.g. data view, regression model explorer).

Overall I’m glad I checked out the package. I suspect I will be typing library(Deducer) in the future when I am trying to make some plots with the ggplot2 package. The maintainers of the package did a nice job with including a set of commands that are essential for data analysis, along with an extensive set of online tutorials and a forum for help with the software. While the code Deducer produces by point and click is not always the greatest for learning the R language, it is a start in the right direction for those wishing to learn R.

Does Jon Skeet have mental powers that make us upvote his answers? (The effect of reputation on upvotes)

Of course, since we all know Jon Skeet does have various powers, I will move on to an unanswered question: whether a user’s reputation makes them receive more upvotes for answers. I’ve seen this theory mentioned in multiple places (see any of the comments to Jon Skeet’s answers that are along the lines of “If this was posted by someone other than Jon Skeet, would this have gotten as many upvotes?”). It is similar to the question we were supposed to address in the currently dormant Polystats project as well.

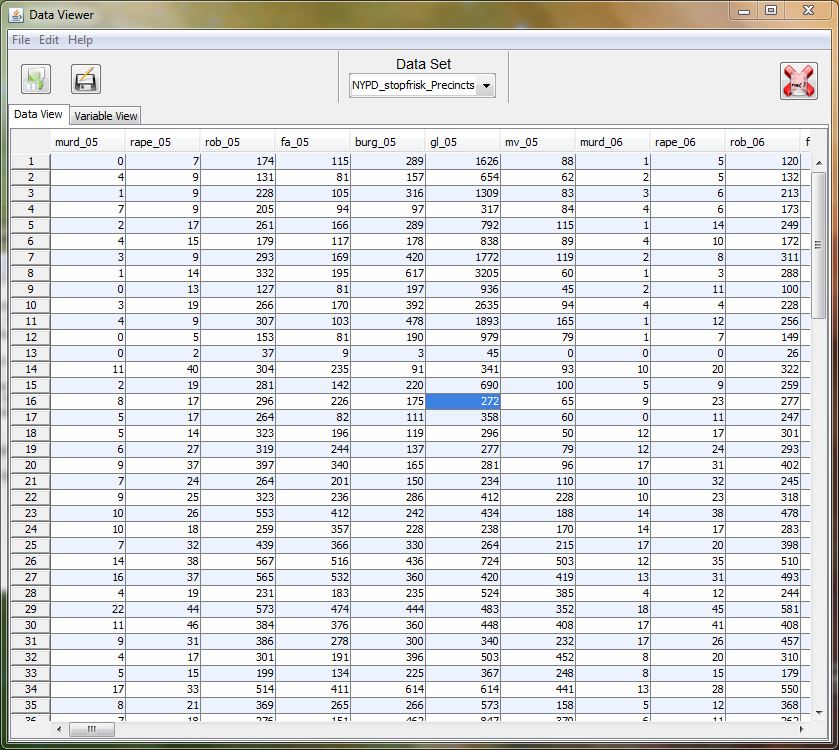

Examining user and post data from the SO Data Dump, first I looked at the correlation between users’ reputation and the score of their most recent posts. Below is a scatterplot, with the user’s current reputation (as of the June-2011 data dump) on the x-axis and the current score on their most recent non community wiki answer on the y-axis.

One can discern a very slight correlation between reputation and the score per answer (score = upvotes – downvotes). While consistent with a theory of reputation effects, an obvious alternative explanation is simply those with higher reputation give better answers (I doubt they bought their way to the top!). I’m sure this is true, but I suspect that they always gave higher quality answers, even before they had their high reputation. Hence, a natural comparison group is to assess whether high rep users get more upvotes compared to answers they gave when they did not have as high reputation.

To assess whether this is true, below I have another scatterplot. The x-axis represents the sequential number of the non community wiki answer for a particular user, and the y-axis represents that post’s score minus the mean score of all of the user’s posts. The scatterplot on the top is all other SO users with a reputation higher than 50,000, and the scatterplot on the bottom is Jon Skeet (he deserves his own simply for the number of posts he has made as of this data dump, over 14,000 posts!).

In simple terms, if reputation effects existed, you would expect to see a positive correlation between the post order number and the score. For those more statistically savvy, if one were to fit a regression line for this data, it would be referred to as a fixed effects regression model, where one is only assessing score variance within a user (i.e., a user becomes their own counter-factual). Since the above graphic is dominated by a few outlying answers (including one that has over 4,000 upvotes!), I made the same graphic as above except that I restricted the Y axis to between -10 and 25 and increased the transparency level.

As one can see, there is not much of a correlation for Jon Skeet (and it appears slightly negative for mortal users). When I fit the actual regression line, it is slightly negative for both mortal users and Jon Skeet. What appears to happen is that there are various outlier answers which garner an incredibly high number of upvotes, although these appear to have happened for Jon Skeet (and the other top users) even in their earlier posts. It seems likely these particular posts attract a lot of attention (and hence give the appearance of reputation effects). But it appears that on average these high rep users always had a high score per answer, even before they gathered a high reputation. Perhaps future analysis could examine whether high rep users are more likely to receive these aberrantly high numbers of upvotes per answer.
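For readers who want to see what a within-user regression of this kind involves, below is a minimal Python sketch. It is not the SPSS/R code used for the analysis: the function name and the toy scores are made up, and it uses the textbook within estimator, which subtracts each user’s mean from both the answer order and the score (the plots above only subtract it from the score).

```python
from statistics import mean


def within_user_slope(scores_by_user):
    """Pooled OLS slope of score on answer order, after removing user means.

    scores_by_user: dict mapping a user to their answer scores in posting order.
    Raises ZeroDivisionError if no user has more than one answer.
    """
    xs, ys = [], []
    for scores in scores_by_user.values():
        order = range(1, len(scores) + 1)
        x_bar, y_bar = mean(order), mean(scores)
        # Demeaning within each user leaves only within-user variation.
        xs.extend(x - x_bar for x in order)
        ys.extend(y - y_bar for y in scores)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    return sxy / sxx


# Made-up scores: one user trending upward, one user flat at a high level.
toy_scores = {"user_a": [1, 2, 3], "user_b": [10, 10, 10]}
slope = within_user_slope(toy_scores)
```

Note that user_b’s consistently high scores do not push the slope up at all; only changes within a user over their sequence of answers count, which is the sense in which each user acts as their own counter-factual.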

This analysis does come with some caveats. If reputation effects are realized early on (like say within the first 100 posts), it wouldn’t be apparent in this graph. While other factors likely affect upvoting as well (such as views, tags, content), these seem less likely to be directly related to a poster’s reputation, especially when only examining within-user score deviations. If you disagree, do some analysis yourself!

I suspect I will be doing some more analysis on behavior on the Stack Exchange sites now that I have some working knowledge of the datasets, so if you’ve done some analysis (like what Qiaochu Yuan recently did) let me know in the comments. Hopefully this revives some interest in the Polystats project as well, as there are a host of other things we could be examining (besides doing a better job of explaining reputation effects than I did in this little bit of exploratory data analysis). Examples of other suggested theories for voting behavior are pity upvotes, and sorting effects.

For those interested, the data manipulation was done in SPSS, and the plots were done in R with the ggplot2 package.